In 5G time division duplex (TDD), uplink (UL) and downlink (DL) resources are separated in time, with different time slots used for DL and UL on the same frequency.

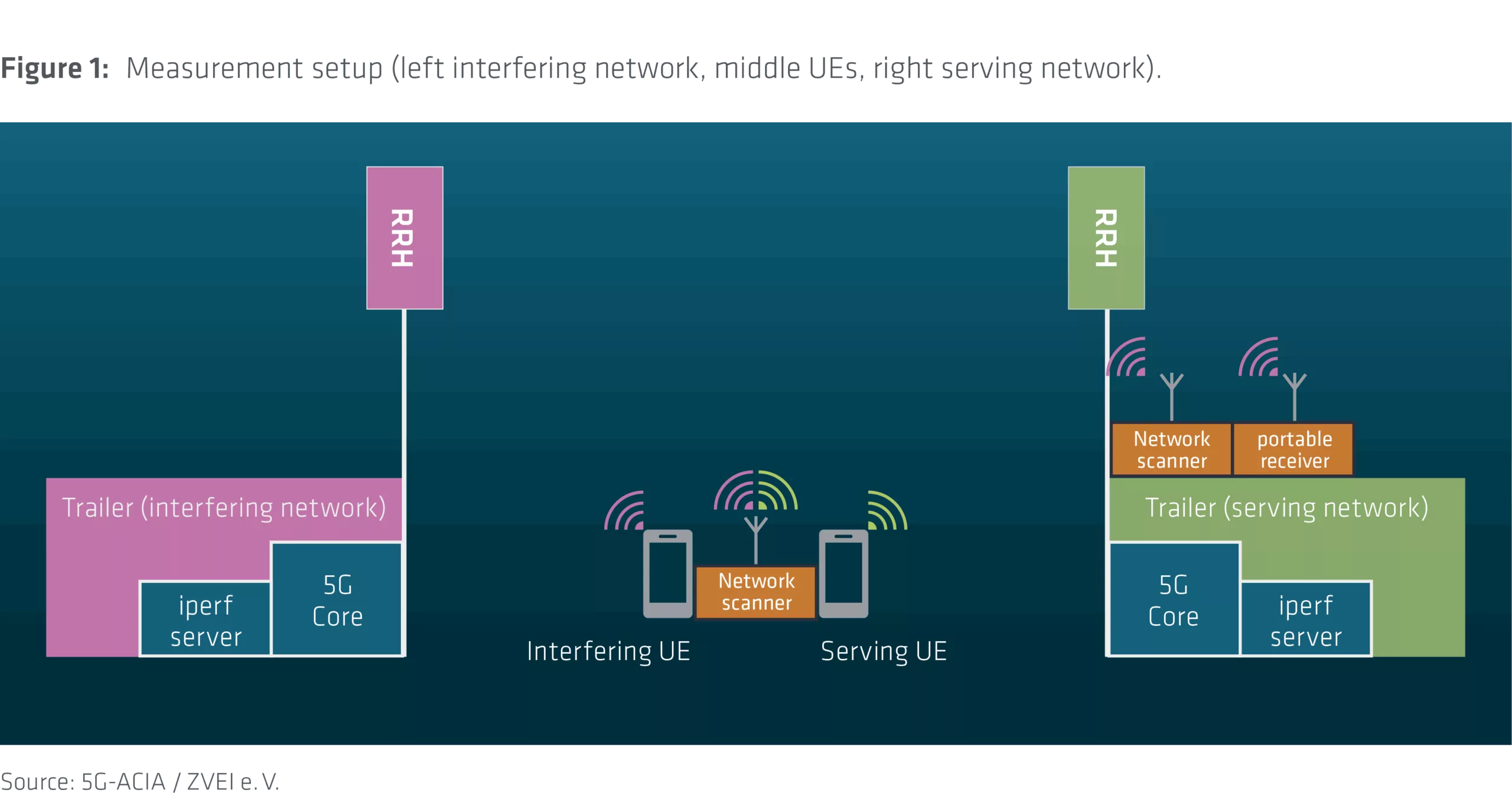

To quantify the effects of non-synchronized cells and the use of different TDD patterns in neighboring networks, Rohde & Schwarz, in collaboration with TU Dresden and Advancing Individual Networks GmbH (AIN), conducted a measurement campaign using mobile networks with full control over all network parameters.

During this campaign, the impact of non-synchronized networks on uplink and downlink throughput was measured and correlated with the Received Signal Strength Indicator (RSSI) and Reference Signal Received Power (RSRP) values at the interfered site. These measurements allow for the prediction of throughput reductions based on the RSRP level of the interfering neighboring site.

The campaign was conducted outdoors, with the neighboring networks positioned 50 meters apart, and no additional attenuation beyond free space loss. The base station antenna was aimed directly at the neighboring system to maximize interference.
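As a rough illustration of what "no additional attenuation beyond free space loss" means at 50 meters, the sketch below evaluates the standard Friis free-space path loss formula. The 50 m distance comes from the setup described above; the 3.7 GHz carrier frequency is only an assumed example value, since the frequency configuration is given in the figures rather than in the text, and antenna gains are ignored.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss between isotropic antennas, in dB (Friis formula)."""
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    )


# 50 m is the site separation stated in the text.
# 3.7 GHz is an ASSUMED example carrier, not a value taken from the campaign.
loss_db = free_space_path_loss_db(distance_m=50.0, frequency_hz=3.7e9)
print(f"Free-space path loss at 50 m: {loss_db:.1f} dB")
```

Because the loss grows by only about 6 dB per doubling of distance, closely spaced sites with antennas facing each other receive a comparatively strong interfering signal.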

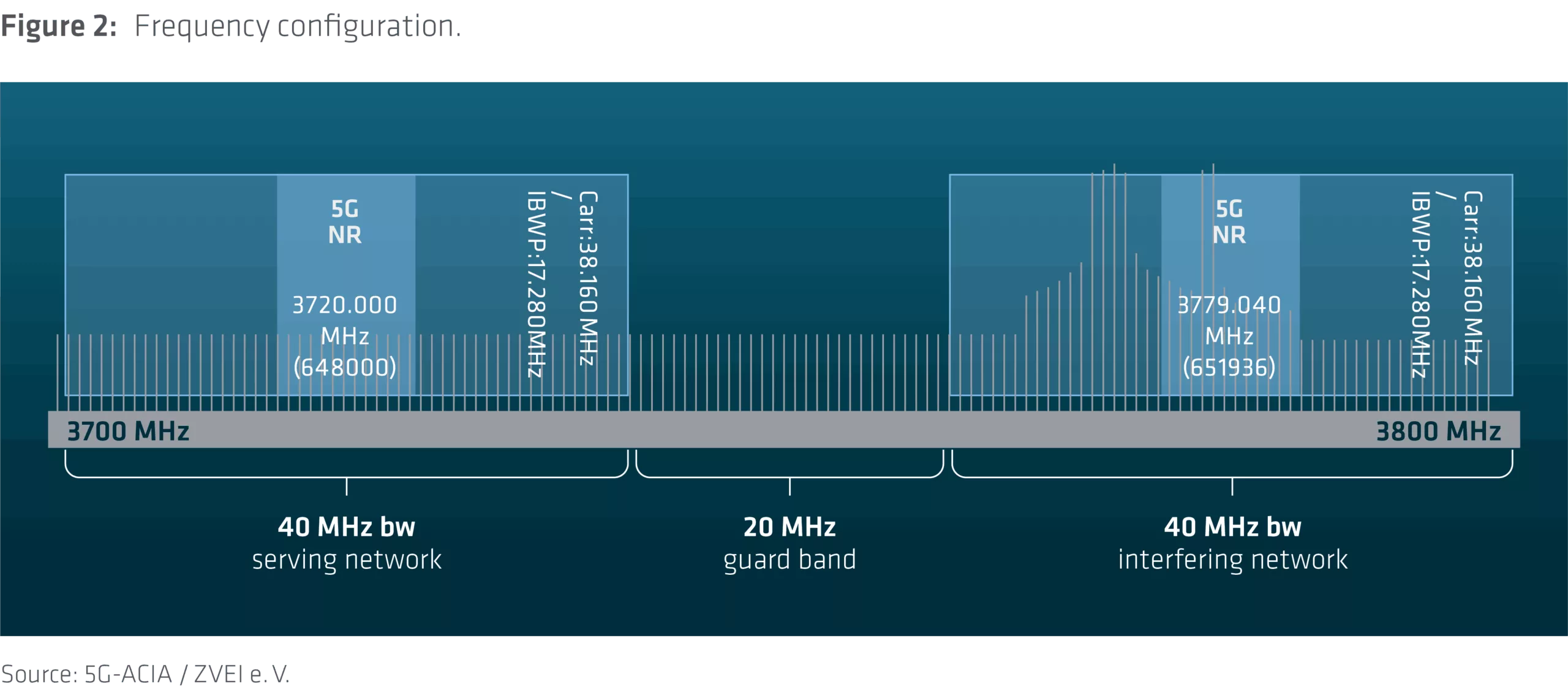

This subtopic presents results on the performance degradation caused by DL signals from an interfering network operating in a different frequency band, even when the two bands are separated by a 20 MHz guard band. These signals can drive the serving base station receiver into its non-linear region, producing intermodulation products.

Figures 1 and 2 show the measurement setup and frequency configuration.

The measurement campaign consists of two target cases:

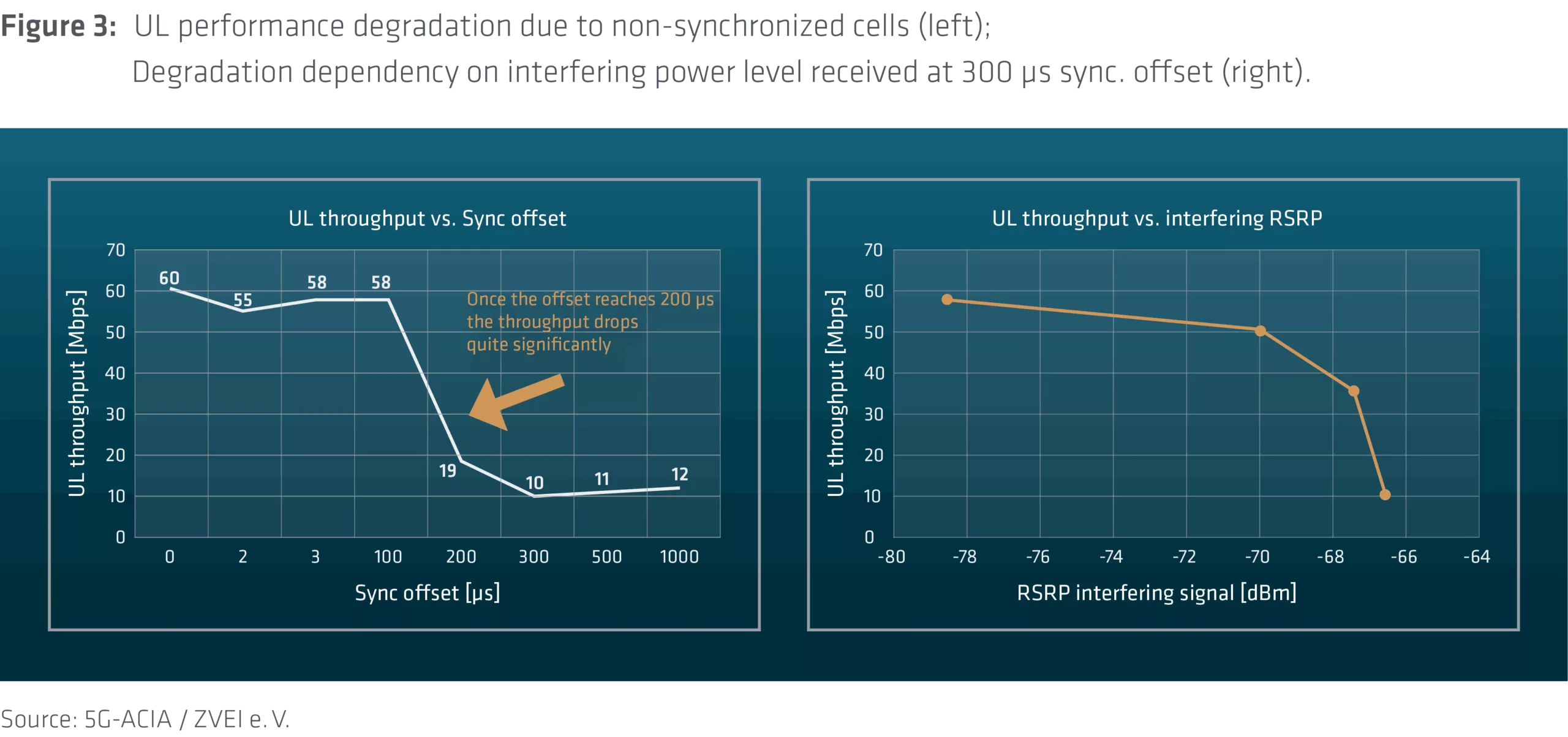

- For the “Unsynchronized networks” scenario (only relevant in accidental error cases, used as a reference), the synchronization offset was changed step by step for the interfering network during each measurement. The TDD patterns are the same in both networks.

- For the “Networks with different TDD patterns” scenario (relevant where non-public networks (NPNs) need higher UL bandwidths), the serving network was configured with more UL slots, while the interfering network used the typical “DDDFU DDDFU” pattern. Both networks are synchronized.
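The two scenarios above can be sketched as a slot-by-slot comparison: interference to the serving uplink arises in slots where the serving base station receives (U) while the interfering base station transmits (D). The following is a minimal sketch under simplifying assumptions: the offset is modeled in whole slots only, flexible slots (F) are treated as non-colliding, and the serving pattern "DFUUU DFUUU" is a hypothetical example of a pattern with more UL slots, not the one used in the campaign.

```python
def expand(pattern: str) -> list[str]:
    """Turn a pattern such as 'DDDFU DDDFU' into per-slot symbols, ignoring spaces."""
    return [symbol for symbol in pattern if not symbol.isspace()]


def colliding_slots(serving: str, interferer: str, offset_slots: int = 0) -> list[int]:
    """Return slot indices where the serving cell is in UL while the interferer is in DL.

    offset_slots delays the interferer pattern cyclically by whole slots
    (a simplification; real synchronization offsets can be fractions of a slot).
    """
    serving_slots = expand(serving)
    interferer_slots = expand(interferer)
    if len(serving_slots) != len(interferer_slots):
        raise ValueError("patterns must cover the same number of slots")
    n = len(serving_slots)
    return [
        k
        for k in range(n)
        if serving_slots[k] == "U" and interferer_slots[(k - offset_slots) % n] == "D"
    ]


TYPICAL = "DDDFU DDDFU"          # pattern named in the text
MORE_UL = "DFUUU DFUUU"          # HYPOTHETICAL UL-heavy serving pattern

# Same pattern, synchronized: no UL slot is hit by interferer DL.
print(colliding_slots(TYPICAL, TYPICAL))                  # []
# Same pattern, interferer shifted by two slots: both UL slots are hit.
print(colliding_slots(TYPICAL, TYPICAL, offset_slots=2))  # [4, 9]
# Different patterns, synchronized: the extra UL slots overlap interferer DL.
print(colliding_slots(MORE_UL, TYPICAL))                  # [2, 7]
```

The sketch only identifies when DL-to-UL overlap occurs; how strongly throughput suffers in those slots depends on the received interference level, which is what the measurements quantify.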

The power levels of the interfering and serving networks are changed by the same amount for each measurement, so both networks always transmit at equal strength. Under this premise, the effect of the interfering power level on the throughput between the serving network and the serving UE is evaluated.

“Unsynchronized networks” scenario (only relevant in accidental error cases, used as a reference):

Figure 3 shows the UL performance degradation depending on the synchronization offset (left) and the interfering power level received at the serving base station receiver (right).

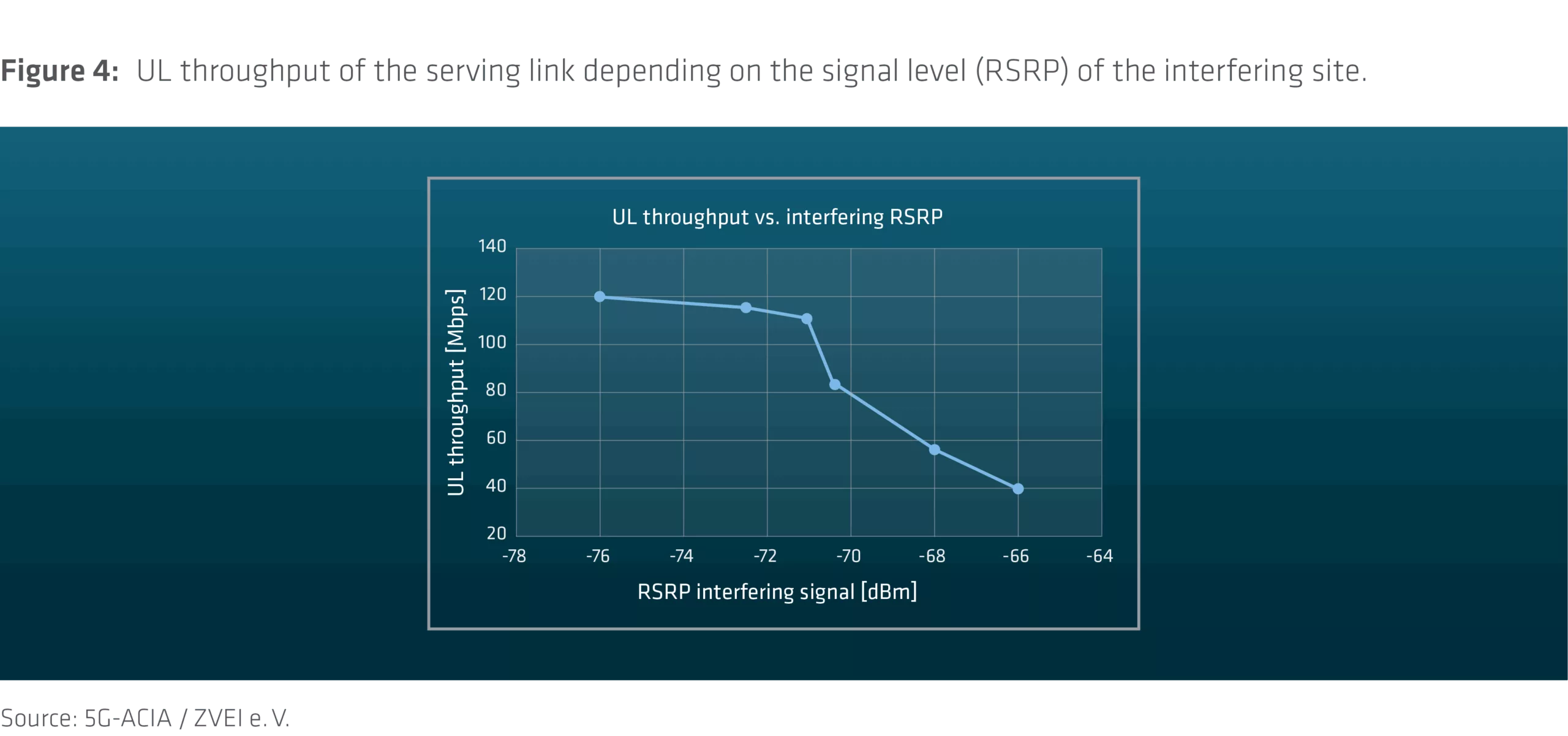

“Networks with different TDD patterns” scenario (relevant where NPNs shall use higher UL bandwidths):

Figure 4 shows the UL throughput of the serving link depending on the signal level (RSRP) of the interfering site, measured at the serving site.

At an interfering signal level of -76 dBm measured at the serving base station receiver, there is no degradation (120 Mbps was the maximum possible UL data throughput in the given scenario). However, a severe impact on the UL throughput is observed if the interfering signal level is higher. A 10 dB increase in interference level (from -76 dBm to -66 dBm RSRP) caused a two-thirds degradation in UL throughput (down to 40 Mbps).
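The arithmetic behind these figures can be checked with a short sketch. It uses only the values quoted above (120 Mbps and 40 Mbps, -76 dBm and -66 dBm); the function names are illustrative and not taken from the referenced note.

```python
def relative_degradation(reference_mbps: float, degraded_mbps: float) -> float:
    """Fraction of throughput lost relative to the reference value."""
    if reference_mbps <= 0:
        raise ValueError("reference throughput must be positive")
    return (reference_mbps - degraded_mbps) / reference_mbps


def db_to_linear_power_ratio(delta_db: float) -> float:
    """Convert a level difference in dB to a linear power ratio."""
    return 10.0 ** (delta_db / 10.0)


# Values quoted in the text.
max_ul_mbps = 120.0
degraded_ul_mbps = 40.0
delta_db = -66.0 - (-76.0)  # increase in interfering RSRP

loss = relative_degradation(max_ul_mbps, degraded_ul_mbps)
power_ratio = db_to_linear_power_ratio(delta_db)
print(f"UL throughput loss: {loss:.1%}")
print(f"Interfering power ratio: {power_ratio:.0f}x")
```

In other words, a tenfold increase in received interfering power coincided with the loss of two thirds of the uplink throughput in this scenario.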

A detailed explanation, analysis, and discussion of the root causes can be found in [1].

[1] Rohde & Schwarz, Educational Note 8NT18, “Performance degradation due to asynchronous 5G networks”, September 2024, https://www.rohde-schwarz.com/appnote/8NT18